Improving Prompt Engineering for Copilot: Content Creation, Code Development, and More

As generative Artificial Intelligence (AI) becomes more commonplace, businesses are leveraging it for content creation, code development, image creation, and process automation.

Microsoft is continuing to add AI capabilities and development tools to Business Central. For example, Microsoft has partnered with OpenAI, the makers of ChatGPT, to make generative AI available for Business Central with Microsoft’s Azure OpenAI Service. The Azure OpenAI Service allows access to several of OpenAI’s advanced AI models, including GPT-4, GPT-3, Codex, and DALL-E.

In addition, Microsoft’s Copilot, built on the same OpenAI models that power ChatGPT, assists developers in a number of Microsoft applications, from creating automations and apps in the Power Platform to streamlining coding tasks in GitHub.

While these tools excel at these types of tasks, one factor in using them well is often overlooked: prompt engineering.

What is Copilot prompt engineering?

If you regularly use search engines like Google or Bing, you probably know that your results can vary depending on how you enter your search query.

In the same way, how you structure your prompts significantly affects the responses you get from generative AI products like ChatGPT, DALL-E, and Copilot. Generative AI tools are built on models, such as Large Language Models (LLMs), that are trained on very large data sets and learn patterns from millions of examples.

When the user makes a request (or prompt), the system breaks it into “tokens,” small pieces of words averaging roughly four characters each (as a rule of thumb, 100 tokens is approximately 75 words). Rather than looking up stored examples, the model draws on the patterns it learned during training to predict, one token at a time, the most likely continuation of the prompt. This is a form of Natural Language Processing (NLP), and it is what allows the system to respond in a natural, human-like tone and style suited to the request. Users can help the system tailor its responses by refining their prompts: rephrasing the request, adding guidance on tone and focus, and adjusting the settings that control how the response is generated.
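The token rule of thumb lends itself to a quick estimate of how large a prompt is. The following is a minimal Python sketch that assumes plain English text. The function names are hypothetical. Exact counts require the model’s actual tokenizer (for OpenAI models, the tiktoken library), and its results will differ from these estimates.

```python
import math


def estimate_tokens_from_chars(text: str) -> int:
    """Rough estimate using the ~4 characters per token rule of thumb."""
    return math.ceil(len(text) / 4)


def estimate_tokens_from_words(text: str) -> int:
    """Rough estimate using the ~100 tokens per 75 words rule of thumb."""
    word_count = len(text.split())
    return math.ceil(word_count * 100 / 75)


prompt = "Write a short, friendly email to a vendor confirming our next delivery date."
print(estimate_tokens_from_chars(prompt))  # 76 characters -> 19
print(estimate_tokens_from_words(prompt))  # 13 words -> 18
```

The two estimates rarely agree exactly. That is expected for a rule of thumb, and it is a reminder to check the real tokenizer when staying under a model’s token limit matters.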

The practice of crafting prompts that elicit the most useful responses is called prompt engineering.

Tips for optimizing prompts

Several techniques can help you get the most from your AI sessions, such as:

- Be clear and concise – Avoid vague requests, which can result in inadequate or irrelevant results.

- Provide context – Include background information on the topic, the intended audience, and the purpose of the request. For example, identify whether the result should be written for grade school students or business professionals.

- Specify tone and format – State the tone you want (for example, formal or conversational) and how the result should appear: as a blog, report, or email.

You can even ask for the response to be written in a particular style. For a fun exercise, try asking ChatGPT to write your resume in the style of William Shakespeare.
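These tips can be turned into a reusable template, so that no element of a good prompt is forgotten. This is a minimal Python sketch. `PromptSpec` and `build_prompt` are hypothetical names, and the “Audience:/Tone:/Format:” labels are one possible layout rather than a format any AI tool requires.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a well-structured prompt."""
    task: str           # what you want, stated clearly and concisely
    audience: str       # who the result is for
    purpose: str        # why the result is needed
    tone: str           # e.g. "formal" or "conversational"
    output_format: str  # e.g. "blog post", "report", or "email"
    style: str = ""     # optional, e.g. "William Shakespeare"


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the spec into one prompt string, one instruction per line."""
    lines = [
        spec.task,
        f"Audience: {spec.audience}.",
        f"Purpose: {spec.purpose}.",
        f"Tone: {spec.tone}.",
        f"Format: {spec.output_format}.",
    ]
    if spec.style:
        lines.append(f"Style: in the style of {spec.style}.")
    return "\n".join(lines)


spec = PromptSpec(
    task="Summarize the benefits of automating purchase order approvals.",
    audience="business professionals who are new to Business Central",
    purpose="to help them decide whether to pilot the feature",
    tone="professional",
    output_format="short email",
)
print(build_prompt(spec))
```

Keeping each element on its own line makes it easy to vary one element, such as tone, and compare the responses.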

Crafting effective prompts

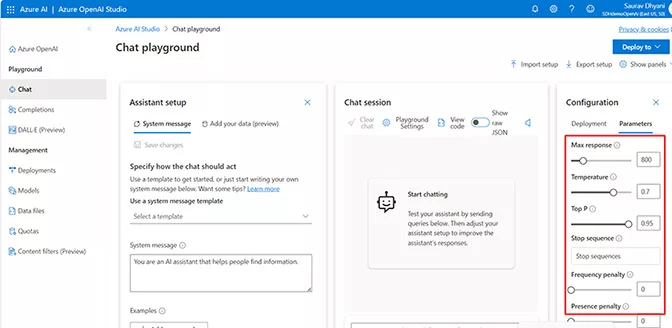

In addition, advanced parameters, set alongside the prompt rather than written into it, let you refine the responses. They include:

- Temperature (temperature) – The temperature parameter controls randomness in the response. Higher values (for example, 0.8) produce more varied, creative results suited to creative writing and brainstorming, while lower values (for example, 0.2) produce more focused, predictable results suited to technical articles.

- Top-k (top_k) – Like temperature, top-k can increase or decrease variety in the results. It limits the model to choosing each next token (word, for simplicity) from only the k most probable candidates. Larger values allow more creative results, while smaller values are more focused. Azure OpenAI does not expose top-k; its closest equivalent is Top P (top_p), which limits choices to the most probable tokens whose combined probability reaches P.

- Presence Penalty (presence_penalty) – The presence penalty lowers the likelihood of any word or phrase that has already appeared in the text so far, regardless of how often it appeared. Higher values (example: presence_penalty=0.8) encourage the model to introduce new terms and topics, while values near 0 let the response stay close to the words and concepts already in use. Accepted values range from -2.0 to 2.0.

- Frequency Penalty (frequency_penalty) – The frequency penalty lowers the likelihood of a word or phrase in proportion to how many times it has already appeared. Higher values (example: frequency_penalty=0.9) reduce repetition, while values near 0 permit more reuse but may produce redundant-sounding text.
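A toy model can make these settings more concrete. It scores a handful of candidate next words and shows how each setting changes the probabilities. This is a simplified Python sketch, not how any production model is implemented. The scores are made up, and the function names are hypothetical. The penalty step follows the adjustment OpenAI has described for its API: subtract the frequency penalty once per prior occurrence, and subtract the presence penalty once if the token has appeared at all. Azure OpenAI offers top_p rather than top-k, so the top-k step is included only to illustrate the concept.

```python
import math
from collections import Counter


def apply_penalties(logits, generated, presence_penalty=0.0, frequency_penalty=0.0):
    """Lower the score of tokens that already appear in the generated text.

    frequency_penalty is subtracted once per previous occurrence;
    presence_penalty is subtracted once for any token seen at least once.
    """
    counts = Counter(generated)
    return {
        tok: score
        - frequency_penalty * counts[tok]
        - (presence_penalty if counts[tok] > 0 else 0.0)
        for tok, score in logits.items()
    }


def next_token_probs(logits, temperature=1.0, top_k=None):
    """Turn raw scores into sampling probabilities.

    Every score is divided by temperature before the softmax: values below 1
    sharpen the distribution (more focused), values above 1 flatten it (more
    varied). If top_k is given, only the k highest-scoring tokens are kept.
    """
    if temperature <= 0:
        raise ValueError("this sketch only handles a positive temperature")
    ranked = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    scaled = [(tok, score / temperature) for tok, score in ranked]
    max_score = max(s for _, s in scaled)  # subtract max for numerical stability
    weights = {tok: math.exp(s - max_score) for tok, s in scaled}
    total = sum(weights.values())
    return {tok: w / total for tok, w in weights.items()}


# Made-up scores for the word that follows "Thank you for your ..."
logits = {"order": 2.0, "business": 1.5, "patience": 1.0, "banana": -1.0}

print(next_token_probs(logits, temperature=0.2))  # most of the weight on "order"
print(next_token_probs(logits, temperature=1.5))  # weight spread more evenly
print(next_token_probs(logits, top_k=2))          # only "order" and "business" remain

# If "order" has already been generated twice, the penalties lower its score
# from 2.0 to 0.5, so "business" now ranks first.
penalized = apply_penalties(logits, ["order", "order"],
                            presence_penalty=0.5, frequency_penalty=0.5)
print(next_token_probs(penalized))
```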

Temperature, Top P, both penalties, and other advanced parameters can be adjusted in the Configuration settings of Azure OpenAI Studio.
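Outside the Studio, the same settings can be sent with each request. The following is a minimal sketch that assumes the Azure OpenAI chat completions REST API. Its request body accepts fields named `messages`, `temperature`, `top_p`, `presence_penalty`, `frequency_penalty`, and `max_tokens`. The `build_chat_request` helper and the system message are hypothetical. The code only builds and prints the JSON body; it does not call the service.

```python
import json


def build_chat_request(user_prompt, *, temperature=0.7, top_p=1.0,
                       presence_penalty=0.0, frequency_penalty=0.0, max_tokens=200):
    """Build a chat completions request body with sampling settings (not sent)."""
    # Check values against the documented ranges before sending anything.
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0 and 1")
    for name, value in (("presence_penalty", presence_penalty),
                        ("frequency_penalty", frequency_penalty)):
        if not -2.0 <= value <= 2.0:
            raise ValueError(f"{name} must be between -2 and 2")
    return {
        "messages": [
            {"role": "system", "content": "You are a helpful assistant for Business Central users."},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": temperature,
        "top_p": top_p,
        "presence_penalty": presence_penalty,
        "frequency_penalty": frequency_penalty,
        "max_tokens": max_tokens,
    }


# Focused settings for a technical summary; raise temperature for brainstorming.
body = build_chat_request(
    "Summarize the steps to post a sales invoice.",
    temperature=0.2,
    frequency_penalty=0.5,
)
print(json.dumps(body, indent=2))
```

A common recommendation is to adjust temperature or top_p, not both at once, so that the effect of each change is easier to see.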

Practical uses of prompt engineering

Developers using AI benefit from understanding how models are built and trained: on large bodies of text that reflect linguistics (the study of language), sentence structure, and correct grammar. Knowing how a model was trained should shape how you construct your prompts.

Developers should experiment and hone their prompt engineering skills to use generative AI effectively. For example, to have Copilot generate an email for customers or vendors, developers need to know which prompt will produce appropriate language, such as telling it to “write an email to a business vendor in 50 words.”
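A model may not follow a length limit exactly, so it can help to check a draft before using it. This is a minimal Python sketch. `email_prompt` and `within_word_limit` are hypothetical helpers, and the draft text stands in for a model response; nothing here calls Copilot.

```python
def email_prompt(recipient: str, topic: str, max_words: int = 50) -> str:
    """Hypothetical helper: state the audience, the topic, and a length limit."""
    return (f"Write an email to a {recipient} about {topic}. "
            f"Use a professional tone and no more than {max_words} words.")


def within_word_limit(draft: str, max_words: int = 50) -> bool:
    """Check a generated draft against the requested length."""
    return len(draft.split()) <= max_words


prompt = email_prompt("business vendor", "a two-day delay in our next purchase order")
# Stand-in for a model response (24 words).
draft = ("Hello, our next purchase order will be delayed by two days. "
         "We will confirm the new date by Friday. Thank you for your patience.")
print(prompt)
print(within_word_limit(draft))
```

If the check fails, one option is to resend the prompt with a firmer instruction instead of trimming the text by hand.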

Prompt engineering applies not only to text responses (emails, reports, blogs, etc.) but also to many other areas. For example, Copilot in Visual Studio can help scaffold code, produce comments and documentation, explain the purpose of functions, and even emulate the developer’s coding style.
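With a code assistant, the prompt is often a comment written just before the function. A specific comment that names the inputs, the output, and the edge cases typically gives the assistant more to work with than a bare function name. The sketch below uses Python for illustration only; Business Central extensions are written in AL. The `Invoice` type and `open_invoice_total` function are hypothetical, and the function body is hand-written, not actual Copilot output.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    customer_no: str
    amount: float
    is_open: bool


# Prompt-style comment for a code assistant:
# Return the total amount of open invoices for the given customer number.
# Ignore closed invoices and return 0.0 if the customer has no open invoices.
def open_invoice_total(invoices: list[Invoice], customer_no: str) -> float:
    return sum(
        (inv.amount for inv in invoices
         if inv.customer_no == customer_no and inv.is_open),
        0.0,  # start value so an empty result is 0.0, not 0
    )


invoices = [
    Invoice("C001", 100.0, True),
    Invoice("C001", 50.0, False),
    Invoice("C002", 25.0, True),
]
print(open_invoice_total(invoices, "C001"))
```

Stating the edge case ("return 0.0 if ...") in the comment is the kind of detail that tends to steer a suggestion toward handling it.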

Azure AI Studio lets developers add their own data and use sophisticated tools while maintaining data privacy.

For practical examples of prompt engineering, Microsoft.com recently published an article in which several Microsoft executives share the prompts they use to get the information they need from Copilot.

Now hiring: Prompt Engineers!

Demand for developers with strong prompt engineering skills is heating up.

McKinsey states, “Organizations are already beginning to make changes to their hiring practices that reflect their generative AI ambitions.”

Microsoft says prompt engineering is “a skill that’s in high demand as more organizations adopt LLM AI models to automate tasks and improve productivity.”

What makes a good prompt engineer?

IBM recommends, “Prompt engineers will need a deep understanding of vocabulary, nuance, phrasing, context, and linguistics because every word in a prompt can influence the outcome. Prompt engineers should also know how to effectively convey the necessary context, instructions, content, or data to the AI model. If the goal is to generate code, a prompt engineer must understand coding principles and programming languages. Those working with image generators should know art history, photography, and film terms. Those generating language context may need to know various narrative styles or literary theories.”

Find out more

There is so much more to say! This blog only scratches the surface.

To dive deeper, watch Hey ChatGPT, Talk to Me! Business Central Integration Unleashed to learn how parameters can help you develop better business solutions in Business Central. To learn how ChatGPT can help you write more effective emails, watch Effortless Email Body Writing with ChatGPT for Business Central.

And be sure to contact ArcherPoint to learn how to get the most out of the AI capabilities in Business Central.